OpenAI released its most advanced AI model yet, called o1, for paying users on Thursday. The launch kicked off the company’s “12 Days of OpenAI” event—a dozen consecutive releases to celebrate the holiday season.

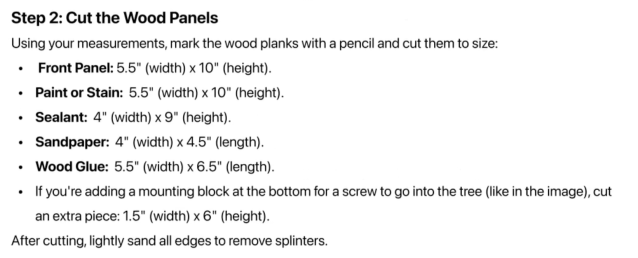

OpenAI has touted o1’s “complex reasoning” capabilities, and announced on Thursday that unlimited access to the model would cost $200 per month. In the video the company released to show the model’s strengths, a user uploads a picture of a wooden birdhouse and asks the model for advice on how to build a similar one. The model “thinks” for a short period and then spits out what on the surface appears to be a comprehensive set of instructions.

Close examination reveals the instructions to be almost useless. The AI measures the amount of paint, glue, and sealant required for the task in inches. It only gives the dimensions for the front panel of the birdhouse, and no others. It recommends cutting a piece of sandpaper to another set of dimensions, for no apparent reason. And in a separate part of the list of instructions, it says “the exact dimensions are as follows…” and then proceeds to give no exact dimensions.

“You would know just as much about building the birdhouse from the image as you would the text, which kind of defeats the whole purpose of the AI tool,” says James Filus, the director of the Institute of Carpenters, a U.K.-based trade body, in an email. He notes that the list of materials includes nails, but the list of tools required does not include a hammer, and that the cost of building the simple birdhouse would be “nowhere near” the $20-50 estimated by o1. “Simply saying ‘install a small hinge’ doesn’t really cover what’s perhaps the most complex part of the design,” he adds, referring to a different part of the video that purports to explain how to add an opening roof to the birdhouse.

OpenAI did not immediately respond to a request for comment.

It’s just the latest example of an AI product demo undermining the very capabilities it was meant to showcase. Last year, a Google advert for an AI-assisted search tool mistakenly said that the James Webb Space Telescope had made a discovery it had not, a gaffe that sent the company’s stock price plummeting. More recently, an updated version of a similar Google tool told early users that it was safe to eat rocks, and that they could use glue to stick cheese to their pizza.

OpenAI’s o1, which according to public benchmarks is its most capable model to date, takes a different approach than ChatGPT to answering questions. It is still essentially a very advanced next-word predictor, trained using machine learning on billions of words of text from the Internet and beyond. But instead of immediately producing words in response to a prompt, it uses a technique called “chain of thought” reasoning to “think” about an answer for a period of time behind the scenes, and gives its answer only after that. This technique often yields more accurate answers than having a model respond reflexively, and OpenAI has touted o1’s reasoning capabilities, especially when it comes to math and coding. It can answer 78% of PhD-level science questions accurately, according to data that OpenAI published alongside a preview version of the model released in September.

But clearly some basic logical errors can still slip through.